Title: EIL-SLAM: Depth-enhanced Edge-based Infrared-LiDAR SLAM

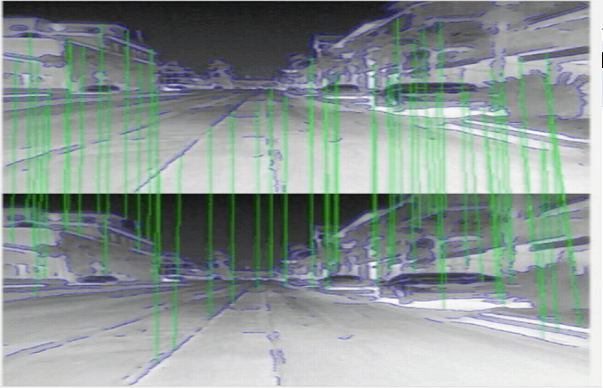

Abstract: Traditional simultaneous localization and mapping (SLAM) approaches that utilize visible cameras or LiDARs frequently fail in dusty, low-textured, or completely dark environments. To address this problem, this study proposes a novel approach by tightly coupling perception data from a thermal infrared camera and a LiDAR based on the advantages of the former. However, applying a thermal infrared camera directly to existing SLAM frameworks is difficult because of the sensor differences. Thus, a new infrared visual odometry method is developed by utilizing edge points as features to ensure the robustness of the state estimation. Furthermore, an edge-based infrared-LiDAR SLAM (EIL-SLAM) framework is developed to generate a dense depth map for recovering visual scale and to provide real-time pose estimation at the same time throughout the day. An infrared-visual and LiDAR-integrated place recognition method is also introduced to achieve robust loop closure. Finally, several experiments are performed to illustrate the effectiveness of the proposed approach.

Wenqiang Chen, Yu Wang, Haoyao Chen*, Yunhui Liu, “EIL-SLAM: Depth-enhanced Edge-based Infrared-LiDAR SLAM”, Journal of Field Robotics, 39(2), 2021: 117-130. (SCI 3.767) https://doi.org/10.1002/rob.22040